Romania's November-December 2024 presidential elections became the theatre of one of Europe's most sophisticated electoral interference operations, culminating in the Constitutional Court's unprecedented annulment of the first-round results. This forensic investigation documents the mechanisms, actors, and strategic objectives of a coordinated campaign that combined cyber attacks, algorithmic manipulation, and cross-platform disinformation to destabilize a NATO and EU member state.

Călin Georgescu, an obscure candidate polling at 5-10%, achieved a shocking 22.9% in the first round, propelled by 614 identified hostile networks operating across 5 digital platforms and originating from 50 countries. Through systematic analysis of 3,585 messages, cross-platform coordination patterns, state-affiliated actor networks comprising 99 Russian entities, and temporal manipulation dynamics, this study reveals the operational architecture of contemporary hybrid warfare targeting democratic processes.

The investigation establishes that this operation represents methodical destabilization aimed at fracturing Euro-Atlantic cohesion, weakening regional resilience, and undermining support for Ukraine. The December 6, 2024 Constitutional Court decision to annul the election, while preserving democratic integrity, demonstrates both the severity of the threat and the unprecedented nature of the interference documented herein.

- The Călin Georgescu Anomaly: From Obscurity to 22.9%

- Research Contributions & Data

- Operational Timeline: Chronology of Destabilization

- Cross-Platform Architecture: Telegram, TikTok, and Ecosystem Integration

- State-Affiliated Actors and Narrative Laundering Networks

- Tactical Methods: Coordination, Amplification, and Burst Activity

- Behavioral Anomalies: Temporal Patterns and Detection Signatures

- In Focus: Facebook Coordinated Networks

- Constitutional Intervention: The December 6 Decision

- Implications for Democratic Resilience in NATO States

- Additional Resources

The Călin Georgescu Anomaly

On November 24, 2024, Romania's first round of presidential elections produced a result that defied all pre-election polling: Călin Georgescu, a candidate consistently measuring 5-10% in surveys, secured 22.9% of votes, finishing first and advancing to the runoff. A divergence of roughly 13-18 percentage points from the polls is too large to dismiss as ordinary forecasting error; combined with the evidence documented in this investigation, it points to systematic manipulation through coordinated digital amplification.

Georgescu's campaign operated with minimal traditional infrastructure—no established party apparatus, limited ground operations, negligible mainstream media presence. Yet his digital footprint exploded in the final weeks before voting, achieving reach that surpassed candidates with vastly superior resources. This discrepancy between offline invisibility and online dominance provided the first clear indicator of coordinated external amplification.

Investigation by the Osavul platform, an AI-powered threat detection system developed in collaboration with the European Commission, NATO Strategic Communications, and multiple European governments, identified 614 hostile networks coordinating Georgescu's amplification. Analysis of 3,585 messages across five platforms revealed sophisticated cross-platform integration rarely seen in electoral manipulation campaigns, with coordinated activity spanning Telegram, Twitter/X, Facebook, VKontakte, and the web.

This investigation employed multi-dimensional analysis combining semantic clustering to identify coordinated messaging patterns, temporal correlation analysis detecting synchronized posting behaviors, network topology mapping revealing coordination structures, cross-platform tracking following narrative migration, and state-actor attribution through established intelligence frameworks. Data sources included platform APIs, public archives, declassified intelligence reports, and collaborative threat intelligence sharing with European security institutions. The dataset comprises 3,585 messages analyzed across temporal, semantic, and network dimensions, with 8,892 additional messages examined for inauthentic behavior patterns.
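The temporal correlation step described above can be illustrated with a minimal sketch. It assumes posts arrive as (account, timestamp) pairs, buckets timestamps into fixed windows, and flags account pairs whose sets of active windows overlap strongly (Jaccard similarity). The window length and thresholds (`window_seconds`, `min_jaccard`, `min_shared`) are hypothetical illustration values, not parameters reported by the study or by Osavul.

```python
from collections import defaultdict
from datetime import datetime, timezone
from itertools import combinations


def time_buckets(posts, window_seconds=60):
    """Map each account to the set of fixed time windows in which it posted."""
    buckets = defaultdict(set)
    for account, ts in posts:
        buckets[account].add(int(ts.timestamp()) // window_seconds)
    return buckets


def synchronized_pairs(posts, window_seconds=60, min_jaccard=0.6, min_shared=3):
    """Flag account pairs whose active windows overlap strongly.

    Thresholds are hypothetical; a real analysis would calibrate them
    against a baseline of ordinary accounts.
    """
    buckets = time_buckets(posts, window_seconds)
    flagged = []
    for a, b in combinations(sorted(buckets), 2):
        shared = buckets[a] & buckets[b]
        jaccard = len(shared) / len(buckets[a] | buckets[b])
        if len(shared) >= min_shared and jaccard >= min_jaccard:
            flagged.append((a, b, round(jaccard, 2)))
    return flagged


# Illustrative data only: two accounts posting in lockstep, one posting independently.
def at(hour, minute):
    return datetime(2024, 11, 26, hour, minute, tzinfo=timezone.utc)


posts = [
    ("acc_1", at(10, 0)), ("acc_2", at(10, 0)),
    ("acc_1", at(12, 5)), ("acc_2", at(12, 5)),
    ("acc_1", at(18, 30)), ("acc_2", at(18, 30)),
    ("acc_3", at(9, 15)), ("acc_3", at(14, 40)),
]
print(synchronized_pairs(posts))  # [('acc_1', 'acc_2', 1.0)]
```

Pairwise comparison is quadratic in the number of accounts, so at the scale of thousands of accounts one would first restrict candidates, for example to accounts active on the same pages.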

The scale of bot deployment proved particularly striking. Analysis identified approximately 25,000 TikTok accounts actively promoting Georgescu content, of which 800 displayed a remarkable pattern: accounts created in 2016 but dormant for years, suddenly activated in coordinated fashion during the campaign's final weeks. This reactivation pattern indicates pre-positioned infrastructure—accounts established years earlier, maintained in reserve, then deployed when needed to evade platform detection systems designed to flag newly created coordinated networks.

The temporal dimension of the campaign reveals operational sophistication. Rather than maintaining constant baseline activity, the networks deployed burst campaigns—concentrated periods of high-intensity posting designed to achieve algorithmic amplification and trending status. These bursts corresponded precisely with key campaign moments, suggesting human-in-the-loop coordination responsive to real-time developments rather than fully automated bot operations.

Research Contributions & Data

Conducted by Andra-Lucia Martinescu et alii and published by The Foreign Policy Centre (a London-based think tank), the 'Networks of Influence' research deployed the AI-powered information threat detection software, Osavul, to investigate the intricate cross-platform landscape of online manipulation and interference surrounding Romania's 2024 presidential elections. It explores in depth how coordinated disinformation campaigns and influence operations—involving both local proxies and state-affiliated actors—have deliberately and systematically exploited digital platforms to amplify strategically calibrated falsehoods, undermine public trust in democratic processes, and distort the integrity of public discourse.

Complementing this research, the AI de Noi initiative—an independent, volunteer-driven civic project—engaged in bottom-up monitoring of inauthentic behaviour on Facebook, manually flagging compromised groups and accounts for takedown. Their grassroots contribution, grounded in collective vigilance and participatory data collection, provided critical visibility into platform-specific manipulation tactics and augmented the broader strategic mapping of hostile influence networks.

By tracing the architecture of transnational influence networks and the systemic risks inherent to platform governance, our findings expose pervasive vulnerabilities, both digital and societal, that transcend national contexts to affect the EU as a whole, consistent with broader patterns of destabilisation identified elsewhere. These threats are deeply persistent and adaptive, continuously evolving within the information environment and re-emerging in moments of political volatility (e.g., elections).

Platform accountability becomes not only a regulatory imperative, but a structural and foundational pillar of our democratic resilience. Without sustained enforcement and transparency obligations that match the scale and complexity of today's threat landscape, digital platforms will remain conduits for hostile manipulation, permitting malign actors to erode democratic processes with impunity. The enforcement of the Digital Services Act must therefore move beyond procedural compliance checklists and embrace proactive oversight capable of protecting publics, particularly the most vulnerable and susceptible, from the cumulative harms of algorithmically driven manipulation.

The fruit of a grassroots, collaborative effort, this analysis builds on three sets of data covering Romania's presidential elections (2024). Albeit not comprehensive, the data corpora provide actionable insights into the strategic approach and tactical execution of coordinated disinformation campaigns and influence operations. We supplement the quantitative analysis with qualitative insights and observational assessments from our own experience on the digital frontlines of detection and first response.

§ FIMI Coordination Dataset (Compromised Actors)

The first dataset (extracted from Osavul) covers the period from February 2023 to 3rd of December 2024, before Romania's Constitutional Court, in an unprecedented decision, annulled the results and suspended the second round of voting. It contains a repository of 3,585 messages published across multiple platforms (Telegram, Twitter/X, Facebook and VKontakte, a Russian social media platform) and the web. TikTok is not covered here: its activity has been amply documented in other competent investigations and in our own analysis, published by The Foreign Policy Centre in December 2024, which built on the first probe of FIMI (foreign information manipulation and interference) that we brought to public light on 29th of November together with OCCRP investigative journalists Matei Rosca and Atilla Biro. While the European Commission's subsequent proceedings focused on TikTok, our aim is to expose the broader cross-platform manipulation environment.

Data Structure:

• Post-related metadata: timestamp, URL, platform, engagement metrics (including views, reactions and shares).

• Actor-level data: source name, audience size, country of origin, and type of compromise. The latter is informed by Indicators of Compromise (IoC) including involvement in known/documented disinformation and influence operations, state-affiliation (where attributable), and use of proxy or laundering/inauthentic behaviour.

Compromise types were identified through both machine-assisted inference and manual validation, drawing on prior listings by disinformation monitoring bodies (e.g., the Hamilton Dashboard), pattern-based attribution, and recognition of repeat activity across multiple coordinated campaigns. This dataset enabled us to identify strategic dissemination routes and cross-platform migration and synchronisation (from initial seeding on Telegram to tactical amplification across a vast social media ecosystem and the web), and to map temporal evolution, culminating in a concentrated burst of activity between late November and early December 2024.
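A record layout for the FIMI coordination dataset might be sketched as follows. The fields mirror the post-level and actor-level metadata listed above, but the class names, the `Compromise` enum, and the `engagement` and `state_affiliated` properties are hypothetical conveniences for illustration, not Osavul's actual export format.

```python
from dataclasses import dataclass, field
from datetime import datetime
from enum import Enum


class Compromise(Enum):
    """Indicator-of-Compromise categories described in the dataset notes."""
    KNOWN_OPERATION = "involved in documented disinformation/influence operations"
    STATE_AFFILIATED = "state affiliation (where attributable)"
    PROXY_OR_INAUTHENTIC = "proxy, laundering or inauthentic behaviour"


@dataclass
class Actor:
    source_name: str
    audience_size: int
    country: str
    compromise: set = field(default_factory=set)  # set of Compromise members

    @property
    def state_affiliated(self) -> bool:
        return Compromise.STATE_AFFILIATED in self.compromise


@dataclass
class Post:
    timestamp: datetime
    url: str
    platform: str  # e.g. "Telegram", "Twitter/X", "Facebook", "VKontakte", "web"
    views: int
    reactions: int
    shares: int
    actor: Actor

    @property
    def engagement(self) -> int:
        """Reactions plus shares; views are kept separate as a reach measure."""
        return self.reactions + self.shares


# Hypothetical example record (name and URL are placeholders).
channel = Actor("example_channel", 120_000, "Russia",
                {Compromise.STATE_AFFILIATED, Compromise.KNOWN_OPERATION})
post = Post(datetime(2024, 11, 26, 9, 30), "https://example.org/posts/1",
            "Telegram", views=15_000, reactions=800, shares=200, actor=channel)
print(post.engagement, channel.state_affiliated)  # 1000 True
```

Keeping compromise types as a set rather than a single label reflects that one actor can meet several Indicators of Compromise at once.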

§ Inauthentic Behaviour Dataset (Osavul)

The second dataset focuses on the detection of inauthentic behaviour and amplification patterns, specifically targeting comment activity on Facebook between 9th of September and 4th of December 2024. It includes 8,892 unique entries, predominantly comments, and captures high-frequency, repetitive messaging patterns across multiple public-facing Facebook pages, including those of mainstream Romanian media outlets with substantial audiences.

Data Structure:

• Post-level data: precise timestamp, full post URL, text content, engagement metrics, sentiment scores.

• Platform-level data: source name, target URL, and content type.

• Actor data: actor (account) name, actor URL, detected actor behaviour and flags (inauthentic).

The dataset reveals several indicators commonly associated with coordinated inauthentic behaviour (CIB) and bot-assisted influence operations, including but not limited to:

• Identical or near-identical comments posted across unrelated (media) pages. For instance, "Votăm Calin Georgescu" (We vote for Calin Georgescu) was repeated hundreds of times within short intervals.

• High-volume posting, with no or minimal engagement (suggesting automation or copy-paste script-driven activity).

• Time-clustering whereby accounts post in synchronised patterns across pages (indicative of script-driven activity).

• Deliberate targeting of high-traffic media outlets, including both neutral and critical sources, to hijack visibility algorithms.
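The first indicator above (identical or near-identical comments across unrelated pages) can be approximated with a normalise-and-group pass. This is a minimal sketch assuming comments arrive as (page, account, text) triples; the normalisation rules and the `min_pages`/`min_count` thresholds are illustrative assumptions, and it omits the short-interval check a real pipeline would add.

```python
import re
import unicodedata
from collections import defaultdict


def normalize(text):
    """Lowercase and strip diacritics and punctuation so trivial variants collapse."""
    decomposed = unicodedata.normalize("NFKD", text)
    without_marks = "".join(ch for ch in decomposed if not unicodedata.combining(ch))
    cleaned = re.sub(r"[^\w\s]", " ", without_marks.lower())
    return " ".join(cleaned.split())


def repeated_templates(comments, min_pages=3, min_count=5):
    """comments: iterable of (page, account, text).

    Returns {normalized_text: (total_count, distinct_pages)} for texts that
    recur often and across several pages.
    """
    pages = defaultdict(set)
    counts = defaultdict(int)
    for page, _account, text in comments:
        key = normalize(text)
        pages[key].add(page)
        counts[key] += 1
    return {
        key: (counts[key], len(pages[key]))
        for key in counts
        if counts[key] >= min_count and len(pages[key]) >= min_pages
    }


# Placeholder pages and accounts; texts vary only in case, diacritics and punctuation.
comments = [
    ("page_a", "user_1", "Votăm Calin Georgescu"),
    ("page_b", "user_2", "Votam Călin Georgescu!"),
    ("page_c", "user_3", "VOTĂM CALIN GEORGESCU"),
    ("page_a", "user_4", "Votăm Calin Georgescu!!"),
    ("page_b", "user_5", "votăm  calin georgescu"),
    ("page_c", "user_6", "Votăm Calin Georgescu."),
    ("page_a", "user_7", "Interesting interview, thanks."),
]
print(repeated_templates(comments))  # {'votam calin georgescu': (6, 3)}
```

Stripping diacritics matters for Romanian-language data, where the same slogan is often typed with and without "ă" or "ș".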

This behaviour correlates with the second amplification wave identified in the Osavul dataset, showing an evolution from strategic narrative seeding to the tactical saturation of public comments sections, with short-form campaign/propaganda slogans, emotionally charged appeals and disinformation talking points.

§ Facebook-based Dataset (AI de Noi)

AI de Noi is a grassroots Facebook group of volunteers that collects and validates user-submitted reports of inauthentic entities on Facebook: groups, users, profiles, and pages. This dataset is continuously evolving; for our quantitative analyses we used several snapshots taken at different points in time.

We collaborated with Bogdan Stancescu (computational specialist and founder of Cheile Împărăției, a grassroots monitoring and analysis initiative) to map the vast networks of coordinated pages and accounts posting identical manipulative content.

Data Structure:

• Actor data: date when the record was added to the dataset, actor URL, actor name, status (online or closed), actor type (group, page, etc), audience (followers/friends/members/etc), date when the group/account was created on Facebook.

Narrative Flow

Network analysis by Cheile Împărăției mapped coordinated Facebook pages posting identical manipulative content, revealing clusters of accounts operating in synchronized patterns during the electoral period.

Operational Timeline

The interference operation unfolded across three distinct phases between September and December 2024, each employing different tactics calibrated to specific operational objectives. Understanding this temporal structure proves essential for comprehending both the strategic planning behind the campaign and the escalating threat it posed to Romanian democracy.

In parallel with the digital campaign, the operation included direct cyber attacks on Romania's electoral infrastructure. On November 19, 2024, the Permanent Electoral Authority's IT systems came under systematic assault from IP addresses originating in 33 countries. These attacks employed SQL injection and cross-site scripting (XSS) techniques to exploit known vulnerabilities in the Authority's public-facing systems.

Forensic analysis revealed that attackers successfully accessed servers containing electoral mapping data, voter registration databases, and internal communications. While no evidence suggests direct vote manipulation, the breach provided intelligence on electoral administration processes, polling station locations, and security protocols—information subsequently exploited in later phases of the operation.

The geographic distribution of attack sources (33 countries) suggests the use of proxy infrastructure designed to obscure attribution. However, analysis of attack patterns, malware signatures, and command-and-control architecture revealed consistency with documented Russian state-sponsored cyber operations, particularly APT28 (Fancy Bear) tactics previously observed in attacks on Ukraine, Georgia, and NATO member states.

The amplification phase deployed the pre-positioned TikTok infrastructure in coordinated fashion. Beginning in early September and intensifying through November, the 25,000-account network flooded Romanian TikTok with Georgescu content. Beyond TikTok, the inauthentic behaviour dataset of 8,892 messages, distributed primarily on Facebook (8,812 posts) with additional activity on Telegram (63), X (10), TikTok (4), and web domains (3), revealed sophisticated multi-platform coordination.

For most of the period between September 5 and December 3, 2024, daily post volume remained consistently low, with only a handful of posts per day. Activity then surged rapidly, peaking around November 25-28 at nearly 2,000 posts in a single day, before dropping sharply in early December. Such a sudden spike suggests a highly coordinated effort driven by automated amplification, organized campaigns, and viral dissemination strategies.
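One common way to flag bursts like the late-November spike is to compare each day's volume against a robust baseline: the median and the median absolute deviation (MAD) of the daily series. The sketch below is an assumption about how such a check could work, not the study's method; the daily counts are invented for illustration and the threshold `k` is hypothetical.

```python
from statistics import median


def burst_days(daily_counts, k=5.0):
    """Return days whose volume exceeds the median by more than k MADs."""
    values = list(daily_counts.values())
    baseline = median(values)
    mad = median(abs(v - baseline) for v in values) or 1.0  # guard against zero spread
    return sorted(day for day, v in daily_counts.items() if (v - baseline) / mad > k)


# Invented daily counts: a low baseline with two spike days.
daily = {
    "2024-11-20": 4, "2024-11-21": 5, "2024-11-22": 3, "2024-11-23": 6,
    "2024-11-24": 4, "2024-11-25": 1950, "2024-11-26": 1400,
    "2024-11-27": 5, "2024-11-28": 4, "2024-11-29": 3,
}
print(burst_days(daily))  # ['2024-11-25', '2024-11-26']
```

Median and MAD are used instead of mean and standard deviation because the spike itself would otherwise inflate the baseline and mask smaller bursts.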

Platform analysis revealed sophisticated exploitation of TikTok's recommendation algorithm. Content was strategically tagged, timed to maximize engagement, and cross-promoted through coordinated commenting and sharing behaviors. The algorithm, designed to surface trending content, interpreted this coordinated activity as genuine viral interest, automatically recommending Georgescu videos to users who had never previously engaged with political content.

The peak of amplification activity occurred on November 25, 2024, the day after first-round voting, when coordinated networks pushed the maximum volume of content celebrating Georgescu's "unexpected" victory and framing it as a "people's revolution" against a corrupt establishment. This post-election surge served a dual purpose: manufacturing the perception of grassroots enthusiasm while laying the groundwork for contested-legitimacy narratives should the results be challenged.

Following public exposure of the interference campaign by investigative journalists on November 29, the operation pivoted to defensive narratives. Rather than denying coordination, networks deployed projection tactics—accusing Romanian authorities, EU institutions, and "globalist elites" of election rigging, censorship, and anti-democratic persecution of Georgescu.

On December 4, Romania's Supreme Council of National Defense (CSAT) declassified intelligence documents confirming the scope of foreign interference. These documents detailed cyber attacks, financial flows funding the operation, and coordination between Russian state actors and domestic proxy networks. Rather than deterring the interference, declassification triggered escalation.

The final phase culminated on December 6, when Romania's Constitutional Court made the unprecedented decision to annul the first round results entirely. The Court's ruling explicitly cited foreign interference as grounds for annulment, marking the first time in Romanian democratic history that electoral results were invalidated due to external manipulation. The second round, already commenced through diaspora voting, was immediately suspended.

Cross-Platform Architecture

The distribution clearly illustrates the platform hierarchy leveraged for tactical amplification: Telegram (2,094 posts) accounts for over 58% of all recorded messages, confirming its role as the primary seeding and coordination platform. The web (826) includes, but is not limited to, fringe media and "news clones" (the Russian-operated Pravda network), used to legitimise Telegram narratives and push links through SEO and hyperlinking strategies. Twitter/X (528) served as an amplification relay, where content from Telegram and web sources was clipped, quoted, and shared by high-engagement influencers. Facebook (135) had less volume but a higher concentration of comment flooding and engagement hijacking, especially on pages belonging to major Romanian media outlets.
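The platform shares quoted above follow directly from the message counts. The check below uses only figures stated in the text (the 3,585-message total and the four platform counts); the two messages left over are not broken down in this section.

```python
TOTAL_MESSAGES = 3585
platform_counts = {"Telegram": 2094, "Web": 826, "Twitter/X": 528, "Facebook": 135}


def shares(counts, total):
    """Percentage of the total accounted for by each platform, to one decimal."""
    return {platform: round(100 * n / total, 1) for platform, n in counts.items()}


print(shares(platform_counts, TOTAL_MESSAGES))
# {'Telegram': 58.4, 'Web': 23.0, 'Twitter/X': 14.7, 'Facebook': 3.8}
print(TOTAL_MESSAGES - sum(platform_counts.values()))  # 2
```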

The operational architecture employed platform-specific tactics calibrated to exploit each system's unique characteristics. Analysis of message distribution reveals a sophisticated understanding of platform affordances, with Telegram serving as the coordination hub and TikTok as the primary amplification vector, supported by Facebook, X, and web domains as secondary dissemination channels.

Telegram functioned as the operational core: the coordination layer where initial narratives were seeded, tactical direction provided, and cross-platform campaigns orchestrated. Over 100 channels with Russian state-media affiliations or documented proxy relationships served as primary distribution nodes. These channels maintained baseline activity, then activated coordinated surges around key events. Telegram accounted for 2,094 messages in the FIMI coordination dataset and for 63 messages in the Facebook-focused inauthentic behaviour dataset.

The Telegram ecosystem proved particularly valuable for its encryption, limited content moderation, and permissive stance toward coordinated behavior. Channels could openly coordinate campaigns without fear of account suspension, share content templates for cross-platform deployment, and maintain persistent infrastructure resistant to platform interventions that might occur on more regulated services.

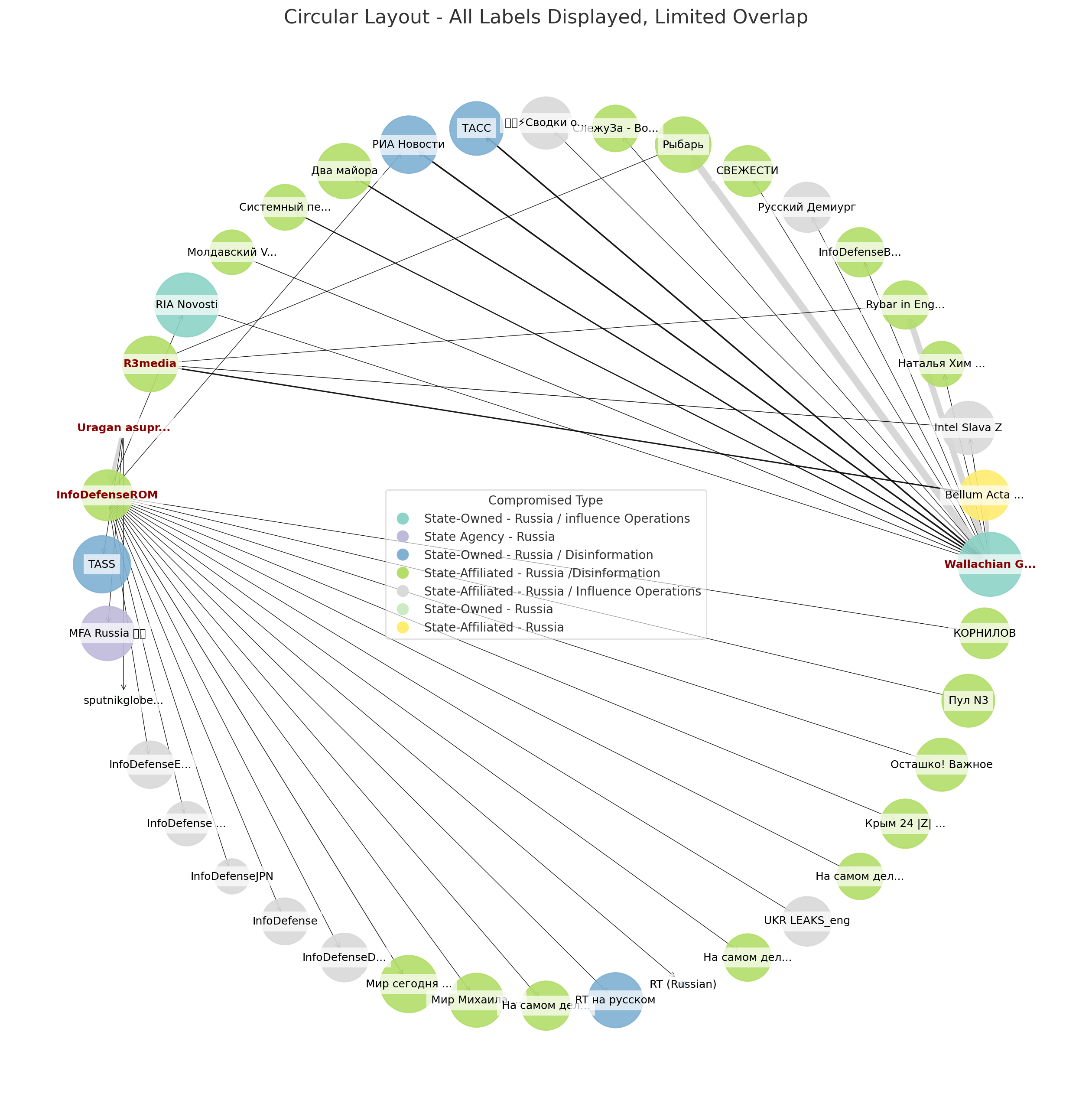

The network diagram (above) and social media snippets (left) reveal that certain accounts flagged for inauthentic behaviour frequently posted on or were associated with known disinformation or compromised channels, such as Slavyangrad, Uragan asupra Europei, and InfoDefenseROM. This pattern suggests a deliberate effort to amplify high-impact narratives within echo chambers already primed for manipulation, thereby increasing virality and reach while shielding disinformation within (ideologically) aligned informational spaces.

TikTok served as the primary amplification engine, exploiting the platform's recommendation algorithm and its young user base. The 25,000-account network deployed content specifically optimized for TikTok's engagement metrics: short-form video with high production quality, emotional appeal over factual argumentation, music and visual effects designed for virality, and content formats proven to trigger algorithmic promotion.

The bot network employed sophisticated behavioral mimicry to avoid detection. Accounts maintained varied posting patterns, engaged with non-political content between campaign posts, followed authentic users, and employed natural language in comments. This behavioral camouflage enabled the network to operate for weeks before platform detection systems identified coordinated activity.

Analysis revealed that approximately 800 accounts within the TikTok network had been created in 2016—years before the 2024 campaign—but remained dormant until strategic activation. This pre-positioning strategy circumvents platform defenses designed to detect newly created coordinated networks by establishing accounts with age and history that appear legitimate.
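The dormancy-then-activation signature can be expressed as a simple rule: flag an account whose first post in the campaign window follows a long silence, or comes long after its creation date if it never posted before. This is a hypothetical sketch; the three-year `min_dormancy_days` default and the campaign start date in the example are illustrative assumptions, not values from the study.

```python
from datetime import date


def reactivated(created, activity, campaign_start, min_dormancy_days=3 * 365):
    """True if an account's first campaign-period post follows a long silent spell."""
    in_campaign = sorted(d for d in activity if d >= campaign_start)
    if not in_campaign:
        return False  # never active during the campaign
    earlier = [d for d in activity if d < campaign_start]
    last_seen = max(earlier) if earlier else created
    return (in_campaign[0] - last_seen).days >= min_dormancy_days


start = date(2024, 11, 1)  # hypothetical campaign-window start
# Created in 2016, one early post, then silent until the campaign.
print(reactivated(date(2016, 5, 1), [date(2016, 6, 1), date(2024, 11, 10)], start))  # True
# Old but continuously active account.
print(reactivated(date(2016, 5, 1), [date(2023, 10, 1), date(2024, 11, 10)], start))  # False
# Newly created account: not this signature (newness-based checks would cover it).
print(reactivated(date(2024, 10, 20), [date(2024, 11, 10)], start))  # False
```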

Account classification as automated or coordinated employed multiple indicators: posting frequency and temporal patterns inconsistent with human behavior; content similarity scores indicating templated messaging; network analysis revealing tight coordination clusters; engagement patterns showing artificial amplification; account metadata analysis detecting bulk creation or dormancy periods; and linguistic analysis identifying automated content generation signatures. High-confidence bot classification required convergence across multiple indicator categories with particular attention to burst activity signatures and synchronized temporal patterns.
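The convergence requirement can be sketched as a rule over indicator categories. The category names, the threshold of three categories, and the choice to make temporal evidence (bursts, synchronisation) mandatory for a high-confidence label are hypothetical readings of the paragraph above, not the study's published scoring.

```python
CATEGORIES = {
    "temporal",    # posting frequency/timing inconsistent with human behaviour, bursts, sync
    "content",     # high similarity scores indicating templated messaging
    "network",     # membership in a tight coordination cluster
    "engagement",  # artificial amplification patterns
    "metadata",    # bulk creation or long dormancy periods
    "linguistic",  # signatures of automated content generation
}


def classify(flags, min_categories=3):
    """flags: dict category -> bool. Requires convergence, with temporal evidence mandatory."""
    unknown = set(flags) - CATEGORIES
    if unknown:
        raise ValueError(f"unknown indicator categories: {sorted(unknown)}")
    hits = {category for category, triggered in flags.items() if triggered}
    if len(hits) >= min_categories and "temporal" in hits:
        return "high-confidence"
    return "suspicious" if hits else "no signal"


print(classify({"temporal": True, "content": True, "network": True}))   # high-confidence
print(classify({"content": True, "network": True, "engagement": True}))  # suspicious
print(classify({"temporal": False}))                                     # no signal
```

Requiring several independent categories keeps a single noisy signal (for example, one prolific but genuine user) from producing a bot label on its own.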

Facebook and X served as narrative laundering platforms: spaces where content originating on Telegram could be repackaged as apparently independent commentary, gaining legitimacy through association with established accounts and verified users. The overwhelming concentration of activity on Facebook (8,812 of 8,892 messages in the inauthentic behavior dataset) partly reflects that dataset's focus on Facebook comment activity, but the volume it captured still points to the platform's vulnerability to coordinated manipulation campaigns targeting older demographics and community-based distribution networks.

YouTube functioned as the persistence layer, hosting longer-form content that remained accessible after the campaign concluded. Interview videos, documentary-style presentations, and speech compilations provided content that could be linked from other platforms, creating the illusion of substantive campaign infrastructure beyond mere social media presence.

The cross-platform integration enabled narrative redundancy: if content was removed from one platform, it remained accessible on others. It also created multiple discovery pathways, whereby users encountering content on one platform could be funneled to coordinated content on others, creating an immersive information environment that reinforced messaging through apparent multi-source confirmation.

State-Affiliated Actors and Narrative Laundering Networks

The donut chart confirms that state-affiliated messaging was not hypothetical — it was measurable: Russia accounted for 92% of all messages linked to state-affiliated actors/entities. Iran (15) and China (17) appeared marginally, but their presence highlights the convergence of authoritarian strategic narratives.

The vast, transnational geographical span reflects a deliberate evasion of regulatory reach. Nevertheless, these visual outputs reinforce the conclusion that the (coordinated) disinformation and influence campaign around Romania's 2024 elections was:

- Strategically transnational, with actor clusters operating from both inside and outside the EU.

- Platform-calibrated, exploiting Telegram's opacity, the web's mainstreaming, Twitter's reach (via affiliated influencer accounts), Facebook's engagement incentives and, last but not least, TikTok's virality-prone algorithms.

- State-tolerated or enabled, with overwhelming Russian-affiliated output and secondary participation from other adversarial regimes. Iran, through its state-controlled outlet Press TV (headquartered in Tehran, with a presence in the US, among other countries), and China, through state-owned or state-controlled media, contributed to Russian-affiliated efforts to amplify disinformation narratives.

The interference operation employed a structured three-tier architecture of actors, with 99 documented Russian state-affiliated entities serving distinct operational functions while maintaining plausible deniability through layered attribution challenges. This multi-tier structure enabled Russian state interests to shape the information environment without direct attribution, leveraging proxy networks and "useful idiots" to obscure coordination.

The Sankey diagram illustrates the linkage between (compromised) actors' countries of origin and their state affiliation, revealing a critical insight: numerous disinformation actors operate from within one jurisdiction but serve the interests or objectives of another, usually hostile, state. Notably, while some actors are geolocated in countries such as Germany, Italy, France, Spain, or Portugal, their content and dissemination behaviour are strongly aligned with narratives pushed by Russian state-affiliated media ecosystems and propaganda channels. This alignment includes reposting from Kremlin-controlled outlets (e.g., RT, Sputnik), synchronising content themes (anti-NATO, pro-Georgescu, elite conspiracies, Covid denialism), or using Telegram channels linked to Russian military or propaganda networks (i.e., disinformation and influence operations). Such a modus operandi is indicative of proxy-based influence operations, which make detection and enforcement across borders significantly harder. The distribution underscores the strategic use of jurisdictional ambiguity, whereby foreign-affiliated actors operate in and/or through Western democracies to exploit the openness of their information spaces while advancing hostile or destabilising agendas. This dynamic may pose an enforcement challenge under the Digital Services Act (DSA), where moderation based solely on geographic origin risks missing the political function and de facto informational allegiance of these actors.
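The Sankey view amounts to counting (country of origin, state affiliation) pairs and singling out actors whose affiliation differs from where they are geolocated. The sketch below assumes a simple (name, country, affiliation) record per actor; the actor names are placeholders, and a mismatch is only a prompt for review, not proof of proxy status.

```python
from collections import Counter


def origin_affiliation_flows(actors):
    """actors: iterable of (name, country_of_origin, state_affiliation or None).

    Returns flow counts for a Sankey-style chart and the names of actors
    whose affiliation points to a state other than their country of origin.
    """
    flows = Counter()
    cross_border = []
    for name, country, affiliation in actors:
        flows[(country, affiliation or "none")] += 1
        if affiliation and affiliation != country:
            cross_border.append(name)
    return flows, cross_border


actors = [
    ("channel_de", "Germany", "Russia"),
    ("channel_it", "Italy", "Russia"),
    ("channel_ru", "Russia", "Russia"),
    ("blog_fr", "France", None),
]
flows, cross_border = origin_affiliation_flows(actors)
print(flows[("Germany", "Russia")], cross_border)  # 1 ['channel_de', 'channel_it']
```

This is also why moderation keyed only to the country column would miss the two cross-border actors in the example.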

Furthermore, Russian state-controlled media acted as one of the main amplifiers, carrying narratives over from Telegram while effectively mainstreaming messages to global audiences. Some of the earliest sources pushing pro-Georgescu narratives were Russian Telegram channels; the narratives then quickly migrated to other platforms, most prominently Twitter/X but also Facebook, through amplification and consistent cross-posting by Russian state-controlled media. An entire ecosystem of Telegram channels is linked to Russia's propaganda apparatus, using proxies as well as local entities for coordinated dissemination.

- February-June 2023 → early seeding on Telegram

- September-October 2024 → narrative diversification and amplification of early conspiracies

- November 2024 → multi-platform amplification surge

The timeline and content samples (above) illustrate a structured, cross-platform evolution of Georgescu-related disinformation narratives, originating with early conspiracy seeding on Telegram in early 2023 (via YouTube), and later spreading to Twitter/X closer to the campaign cycle – a period that also coincides with narrative/topical diversification. As shown, posts falsely portraying Georgescu as a UN whistleblower exposing a global paedophilia ring and transhumanist agenda were systematically republished and escalated across platforms and the web — particularly towards the end of November 2024, when (coordinated) amplification efforts had peaked. Such a deliberate multi-phase approach could also indicate a tightly synchronised strategy aimed at transforming fringe conspiracies into mainstream political discourse.

The first Russian-origin messages publishing pro-Georgescu narratives appeared on Telegram roughly in the summer of 2023. One example is AllRatings, a Russian-origin Telegram channel flagged as state-affiliated by disinformation monitors. This channel seeded a widely disseminated conspiracy about a global paedophilia ring purportedly uncovered by Calin Georgescu, cast as a UN whistleblower. The same narrative was disseminated at later stages by the POOL N3 (Пул N3) Telegram channel, which has hundreds of thousands of followers. POOL N3 is run by Dmitry Smirnov, a Kremlin journalist at Komsomolskaya Pravda (one of Russia's largest pro-government newspapers), and regularly reposts state-aligned propaganda from RT, Sputnik, and Kremlin ministries. In turn, content seeded on the N3 channel has been distributed by Russian state-owned or state-affiliated media outlets. The multilingual Pravda ecosystem of clone news websites also referenced content published by N3. All are integrated nodes of a larger coordinated network operating from Russia, Portal Kombat: Viginum, the French agency specialised in detecting foreign digital interference, flagged the sites' common IP address hosted on a server in Russia, their shared HTML architecture, and their identical external links, graphics and website sections.

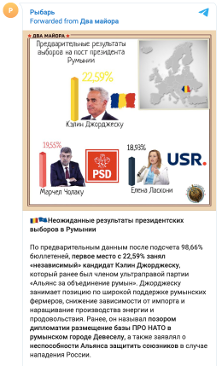

Another example is the Russian Telegram channel Rybar (below), which has spinoffs in multiple languages and over 1.2 million followers. Rybar started pushing pro-Georgescu narratives in late November 2024, as coordinated amplification peaked after the first presidential voting round (a crucial timing). Messages were recycled across Kremlin-affiliated platforms and Rybar's transnational, multi-language spinoffs (in German, English, French, Spanish, etc.) in very short succession, indicating a coordinated release. Rybar originally started as a Russian OSINT blog but later became a military propaganda tool used by the Russian Ministry of Defence (MoD); it is operated by Mikhail Zvinchuk, a former MoD press officer who has been sanctioned by Ukraine and flagged by EU disinformation monitors. Rewards for Justice, a US State Department portal, states that Rybar has received funding from the Russian state-owned defence conglomerate Rostec, which was sanctioned by the US Treasury in June 2022. The channel was also involved in Russian disinformation and influence operations during the US elections.

The captions above show the Pravda media portal using Rybar's Telegram channel as its original source, even referencing the channel in the caption of its post. Russian state-run media websites and affiliated proxy networks played a crucial role in mainstreaming Telegram-bred narratives. By late 2024, the final push saw Moldovan and Romanian pro-Russian networks resharing Rybar's messages and themes, targeting Romanian-speaking audiences.

In our dataset, content distributed by Rybar's Italian, Spanish, and French channels in some instances targets both Romanian and Moldovan politics, in a coordinated influence operation portraying Moldova's EU integration as Romanian "absorption" and playing into ethno-national and historical grievances. The first stage of seeding occurred on Telegram, where Rybar's main and language-specific channels initially posted content. Identical or similar content then migrated to Twitter/X and to web-based articles disseminated internationally by Russian media outlets such as RT, Sputnik, the Pravda group, and other aligned multi-language outlets, essentially globalising disinformation narratives.
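The seeding-then-migration pattern can be traced by recording when a narrative first appears on each platform. A minimal sketch, assuming posts have already been grouped into one narrative cluster and arrive as (platform, timestamp) pairs; the timestamps below are invented for illustration.

```python
from datetime import datetime


def migration_path(posts):
    """Order platforms by first appearance of a narrative and report lag from the seed."""
    first_seen = {}
    for platform, ts in posts:
        if platform not in first_seen or ts < first_seen[platform]:
            first_seen[platform] = ts
    if not first_seen:
        return []
    ordered = sorted(first_seen.items(), key=lambda item: item[1])
    seed_time = ordered[0][1]
    return [(platform, ts - seed_time) for platform, ts in ordered]


# Invented timestamps for one narrative cluster.
cluster = [
    ("Twitter/X", datetime(2024, 11, 26, 9, 0)),
    ("Telegram", datetime(2024, 11, 25, 18, 0)),
    ("Web", datetime(2024, 11, 26, 14, 0)),
    ("Telegram", datetime(2024, 11, 26, 8, 0)),
]
for platform, lag in migration_path(cluster):
    print(platform, lag)
# Telegram 0:00:00
# Twitter/X 15:00:00
# Web 20:00:00
```

Short, consistent lags across many clusters are more telling than any single path, since organic sharing can also move content from Telegram to other platforms.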

The rise in Twitter/X mentions indicates coordinated efforts to push campaign narratives into Western social media ecosystems. Aligned accounts from North and South America amplify similar or identical content, often with a local twist tailored to specific audiences. A few Twitter/X posts from only a handful of accounts garnered substantial engagement. For example, US-based Twitter/X influencer Jackson Hinkle accounted for nearly 80% of the roughly 600,000 views recorded among US-based actors. This figure does not account for the MAGA movement's vocal support of Georgescu's candidacy, which has escalated in recent months. Hinkle's earliest post about the Romanian elections dates from 26th November 2024, coinciding with the peak of this coordinated amplification campaign (roughly between 25th and 30th of November across all social media platforms). His most engaged tweet, stating "A vote for Calin Georgescu is a vote to SAVE THE WEST from anti-human satanists," garnered over 190,000 views on the 3rd of December 2024. While Hinkle does not always replicate Russian posts verbatim, he emphasises key Russian themes, particularly globalist oligarchy conspiracies and anti-Western/anti-establishment positions, at critical times.

In Narrative Evolution and Cross-Platform Amplification II (rendered above), we showed how Press TV, a state-controlled Iranian media outlet, amplified US-based Jackson Hinkle's pro-Georgescu post on its Telegram channel. Such themes garnered a significant following among Romanian voters, and it is safe to assert that they became embedded in public discourse after being recycled worldwide. Romanian channels and news portals that consistently spread Russian propaganda and falsehoods have been well established across multiple platforms, including Telegram, Facebook, TikTok, and the web, for years. We could trace their activity back to Covid-19 disinformation campaigns, with many of these channels linked to well-known Russian-affiliated influence networks. A few examples include Wallachian Gazette, !!!LIBERTATE, Stop PLANDEMIA, Comunitatea Identitara Romania, InfoPlaneta, R3media, and Uragan asupra Europei, among others.

Building on a limited data sample, the graph below renders the network of four Romanian-language channels and accounts (labels in red) and how they link to Russian-affiliated influence and disinformation vectors, many of which are based in Europe. The lines map inbound and outbound connections between actors based on the frequency and type of interaction, including the number of posts, reposts, and overall reactions. Understanding this networked ecosystem is crucial for identifying the pathways through which information threats propagate, an analysis we hope to expand further.

State-affiliation attribution employed convergent evidence from multiple sources: official sanctions designations by EU, US, or NATO member states; investigative journalism documentation by reputable outlets; leaked documents or official government disclosures; network analysis revealing coordination patterns with known state actors; financial investigation tracing funding sources; and linguistic and stylistic analysis identifying translated or adapted Russian-origin content. High-confidence state-affiliation required at least three independent verification streams. The 99 Russian state-affiliated entities documented in this investigation met high-confidence attribution standards across multiple verification categories.
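A minimal sketch of the three-stream rule, assuming each evidence category listed above counts as one independent verification stream (so two articles from different outlets count only once). The category keys and the `Entity` type are hypothetical illustrations, not the investigation's own tooling.

```python
from dataclasses import dataclass, field

# Evidence categories from the attribution criteria above;
# the short keys are hypothetical labels chosen for this sketch.
CATEGORIES = {
    "sanctions",    # official sanctions designations (EU, US, NATO member states)
    "journalism",   # investigative journalism by reputable outlets
    "disclosure",   # leaked documents or official government disclosures
    "network",      # coordination patterns with known state actors
    "financial",    # funding sources traced by financial investigation
    "linguistic",   # translated or adapted Russian-origin content
}


@dataclass
class Entity:
    name: str
    # Each item is (category, source identifier); several sources may share a category.
    evidence: list[tuple[str, str]] = field(default_factory=list)


def verification_streams(entity: Entity) -> set[str]:
    """Distinct evidence categories supporting the entity (unknown categories ignored)."""
    return {category for category, _ in entity.evidence if category in CATEGORIES}


def is_high_confidence(entity: Entity, minimum: int = 3) -> bool:
    """High confidence requires at least `minimum` independent verification streams."""
    return len(verification_streams(entity)) >= minimum


channel = Entity("example-channel", [
    ("sanctions", "eu-list"),
    ("journalism", "outlet-a"),
    ("journalism", "outlet-b"),  # a second article does not add a new stream
])
print(is_high_confidence(channel))  # False: only two streams
channel.evidence.append(("financial", "funding-trace"))
print(is_high_confidence(channel))  # True: three streams
```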

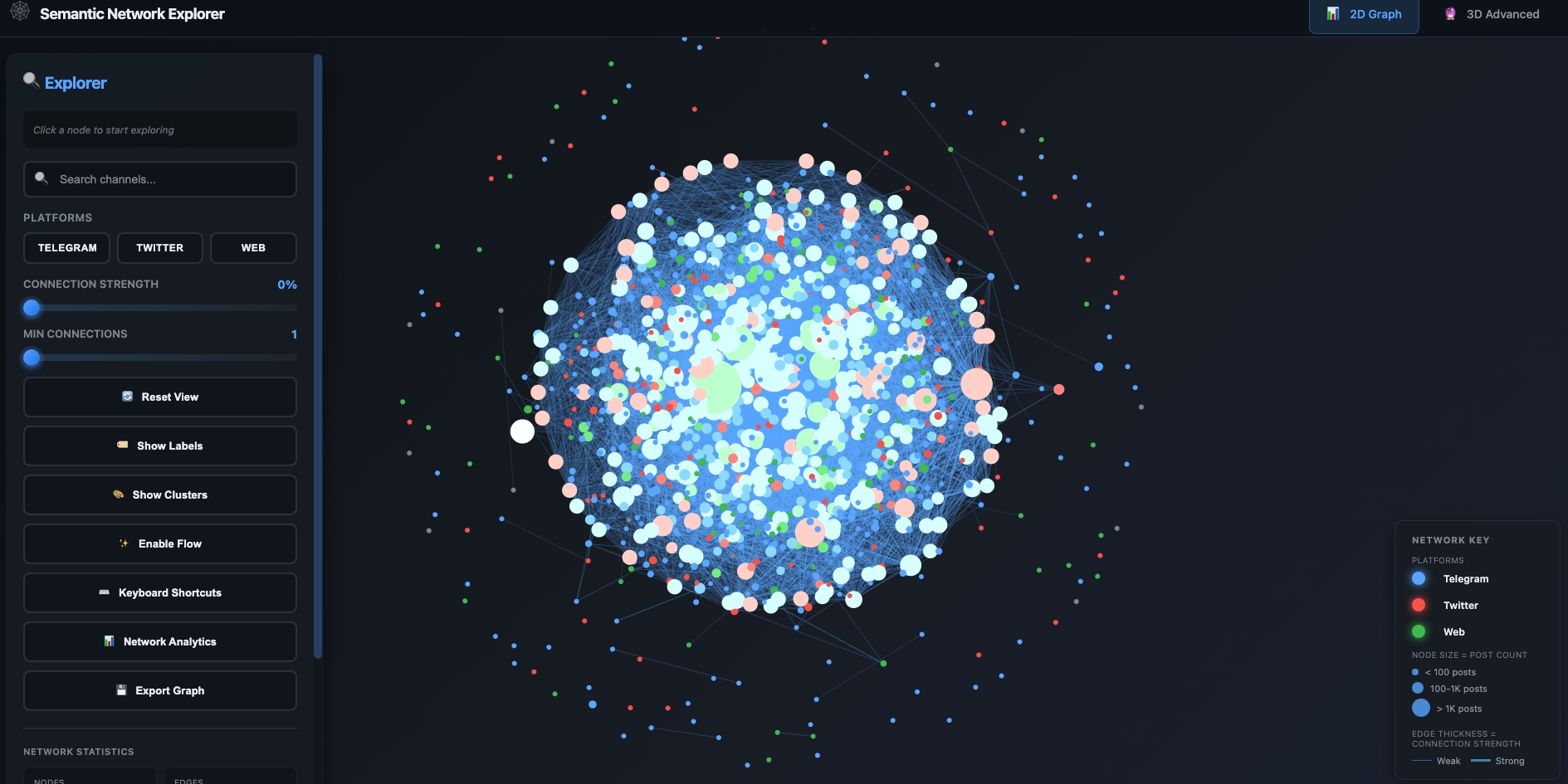

The Semantic Similarity graph applies the publicly available D3lta methodology developed by Viginum (France). The full semantic network underlying this investigation is available for interactive exploration: the tool lets researchers and analysts navigate millions of connections across Telegram, Twitter/X, and web platforms. Features include real-time node selection with detailed connection information, platform-based filtering, community detection algorithms, and data export (PNG, JSON, CSV). Node size represents post volume and edge thickness indicates connection strength, allowing users to identify key amplification nodes and trace narrative flows across the documented influence network.

Tactical Methods

The operational repertoire of the interference campaign shows an evolution beyond crude bot networks or obvious fake news toward sophisticated manipulation that exploits platform architectures, algorithmic systems, and human cognitive vulnerabilities. Analysis of 8,892 messages reveals tactical sophistication in deployment patterns, with 92% of Facebook activity concentrated within 0-5 minute burst intervals, consistent with highly coordinated, automated behavior.

Burst Activity constituted the primary tactical signature of the operation. Defined as rapid, high-volume posting of content within short time frames, burst activity is a widely recognized manipulation tactic designed to artificially boost message visibility. By flooding platforms with posts in condensed timeframes, actors exploit engagement velocity (the speed at which interactions accumulate) and social media algorithms that prioritize content with early, rapid engagement.

The extreme skew toward the 0-5 minute interval is consistent with coordinated, automated, or otherwise inauthentic behavior. Organic conversations, by contrast, tend to exhibit greater temporal variation, with posts spread more evenly across longer time intervals. Inauthentic activity tends to cluster within specific intervals, in this case the 0 to 5-minute window, as in coordinated influence campaigns and bot networks where automated accounts post in rapid succession to perpetuate the illusion of widespread support.
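The interval bucketing behind the 0-5 minute figure could be sketched as follows. It assumes each comment is paired with the publication time of the post it reacts to; the interval edges other than 0-5 minutes and 1-2 hours are hypothetical placeholders.

```python
from bisect import bisect_right
from collections import Counter
from datetime import datetime, timedelta

# Hypothetical interval edges (minutes since the target content was published).
# Only the 0-5 minute and 1-2 hour windows are named in the text.
EDGES = [5, 15, 60, 120, 360]
LABELS = ["0-5m", "5-15m", "15-60m", "1-2h", "2-6h", "6h+"]


def interval_label(published: datetime, posted: datetime) -> str:
    minutes = (posted - published).total_seconds() / 60
    if minutes < 0:
        raise ValueError("post precedes the content it reacts to")
    # bisect_right puts exactly 5.0 minutes into "5-15m", so "0-5m" means [0, 5).
    return LABELS[bisect_right(EDGES, minutes)]


def interval_shares(pairs) -> dict[str, float]:
    """pairs: iterable of (published, posted) datetimes. Returns the share of posts per interval."""
    counts = Counter(interval_label(pub, post) for pub, post in pairs)
    total = sum(counts.values())
    return {label: counts[label] / total for label in LABELS} if total else {}


t0 = datetime(2024, 11, 20, 12, 0)
pairs = [(t0, t0 + timedelta(minutes=m)) for m in (1, 2, 3, 90)]
print(interval_shares(pairs)["0-5m"])  # 0.75
```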

By saturating platforms with posts in the first five minutes after content is published, actors artificially inflate visibility and manipulate algorithmic ranking. In this case, most comments were posted on media pages with substantial audiences, which is also a signature of automated, bot-driven patterns. Concentrating activity on high-visibility pages maximizes initial engagement velocity, triggering platform algorithms to surface the content more broadly.

Actor-based amplification refers to the repeated dissemination of identical or near-identical content by multiple actors, creating the illusion of widespread support or consensus. It measures the number of distinct accounts amplifying the same message. This tactic is commonly associated with sockpuppet networks, coordinated bot accounts, and inauthentic engagement networks, in which multiple accounts, often controlled by a central entity, simultaneously or sequentially post or repost the same message.

By increasing the number of unique actors promoting a specific narrative, this form of amplification influences algorithmic ranking systems on social media, elevating content visibility and making it appear to be trending organically. Within the 0 to 5-minute interval, text repetition was extremely high, with numerous distinct actors participating synchronously in dissemination. The burst-post metric combines repetition frequency and actor diversity, measuring the intensity of amplification through convergent metrics.

Content-driven amplification focuses on distributing the same message across multiple unique sources. This method, often referred to as domain or URL cycling, involves strategically posting identical content across numerous distinct URLs, domains, or social media pages to fabricate a perception of broad, independent validation or support. Malicious actors often rely on URL cycling both to evade detection and to circumvent credibility checks by saturating the information ecosystem with multiple references to the same claim.

Higher values for burst posts by distinct URLs suggest deliberate narrative amplification across various sources, while low text repetition combined with high URL diversity could indicate cross-platform manipulation and disinformation tactics. Analysis revealed that content amplification is highly concentrated in the first moments after publication, a signature of automated activity. The significant drop in amplification after the first few minutes implies that the initial burst is likely intended to manipulate platform algorithms, ensuring maximum exposure and almost instant visibility.

The contrast between actor-based and content-based amplification patterns reveals a sophisticated two-phase strategy. The actor amplification heatmap shows a relatively even distribution across intervals, with the highest engagement between 1 and 2 hours, suggesting that actor-based coordination is sustained over time rather than occurring in sharp bursts. This consistency across intervals indicates dissemination by a network of accounts engaging over an extended period, a strategy whereby actors maintain visibility and engagement to simulate organic discussion or sustain a narrative.

The content amplification heatmap, in contrast, shows an extreme peak in the 0 to 5-minute window followed by a sharp decline in later intervals. The contrast between a high-intensity content push at the start and more sustained actor-based engagement over time highlights the two phases: an initial seeding phase followed by a longer-term reinforcement effort.

Three complementary metrics measure burst activity intensity: (1) Post Count measures activity volume for rapid anomaly detection but ignores actor and content repetition patterns; (2) Text Repeats × Distinct Actors measures actor coordination, detecting coordinated posting by multiple accounts but overlooking content distribution tactics; (3) Text Repeats × Distinct URLs measures content dissemination, capturing content cycling across domains but ignoring actor participation patterns. Convergent analysis across all three metrics provides comprehensive detection of coordinated inauthentic behavior combining volume, actor coordination, and content amplification dimensions.
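The three metrics can be computed per interval in a single pass. This sketch assumes exact text matching and aggregates the two product metrics by summing them over the distinct texts in each interval; the text does not say how per-text products are combined, so that choice is an assumption, as are the record layout and sample values.

```python
from collections import defaultdict


def burst_metrics(records):
    """records: iterable of (interval, text, actor_id, url) tuples.

    Returns, per interval: post count, the sum over texts of
    (repeats x distinct actors), and the sum over texts of
    (repeats x distinct URLs).
    """
    per_text = defaultdict(lambda: {"repeats": 0, "actors": set(), "urls": set()})
    post_count = defaultdict(int)
    for interval, text, actor, url in records:
        post_count[interval] += 1
        entry = per_text[(interval, text)]
        entry["repeats"] += 1
        entry["actors"].add(actor)
        entry["urls"].add(url)

    metrics = {
        interval: {"post_count": n, "repeats_x_actors": 0, "repeats_x_urls": 0}
        for interval, n in post_count.items()
    }
    for (interval, _text), entry in per_text.items():
        metrics[interval]["repeats_x_actors"] += entry["repeats"] * len(entry["actors"])
        metrics[interval]["repeats_x_urls"] += entry["repeats"] * len(entry["urls"])
    return metrics


records = [
    ("0-5m", "Vote Georgescu", "acc1", "page/a"),
    ("0-5m", "Vote Georgescu", "acc2", "page/b"),
    ("0-5m", "Vote Georgescu", "acc3", "page/c"),
    ("1-2h", "Vote Georgescu", "acc1", "page/a"),
]
print(burst_metrics(records)["0-5m"])
```

Three accounts repeating one text on three pages score 3 × 3 = 9 on both product metrics, while the single later repost scores 1, which is how the products separate coordinated bursts from plain volume.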

Templated Amplification constituted the primary coordination mechanism. Analysis identified dozens of message templates deployed across thousands of accounts with minimal variation. Templates included calls to action, emotional appeals, and pre-formatted testimonials. Sophisticated operators introduced strategic variation to evade detection through paraphrasing, restructuring, or translation while maintaining semantic consistency. Platform detection systems designed to flag identical content struggled with these semantically-identical but syntactically-diverse variants.
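One simple way to group templated messages is near-duplicate detection with word shingles and Jaccard similarity. This is a swapped-in technique for illustration, not the method used in this investigation: it tolerates differences in case, punctuation, and light edits, but paraphrases and translations would require semantic similarity methods. The 3-word shingles and the 0.5 threshold are assumptions.

```python
import re


def normalize(text: str) -> list[str]:
    """Lowercase and keep word characters only (Unicode-aware, so diacritics survive)."""
    return re.findall(r"\w+", text.lower())


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Set of overlapping k-word sequences; short texts become a single shingle."""
    words = normalize(text)
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def template_groups(messages: list[str], threshold: float = 0.5) -> list[list[str]]:
    """Greedy grouping: each message joins the first group whose seed is similar enough."""
    groups: list[tuple[set, list[str]]] = []
    for msg in messages:
        sh = shingles(msg)
        for seed, members in groups:
            if jaccard(sh, seed) >= threshold:
                members.append(msg)
                break
        else:
            groups.append((sh, [msg]))
    return [members for _, members in groups]


messages = [
    "Vote for Georgescu on Sunday, save Romania!",
    "vote for GEORGESCU on Sunday - save Romania",
    "The weather in Bucharest is cold today",
]
print(template_groups(messages))
```

The greedy pass is linear in the number of groups per message; at scale, locality-sensitive hashing (MinHash) is the usual way to avoid comparing every message with every group.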

Algorithmic Gaming demonstrated a sophisticated understanding of platform recommendation systems. On TikTok, coordinated networks employed precise timing strategies: posting content during peak usage hours, using trending audio tracks to piggyback on organic virality, and deploying coordinated engagement within the first minutes of posting to trigger algorithmic promotion. The bot networks exhibited advanced behavioral mimicry, including varied posting schedules, engagement with non-political content, and comments generated with natural language processing rather than obvious spam.

Narrative Seeding and Amplification Cycles followed predictable patterns that reveal operational coordination. Narratives typically emerged first on Telegram, were tested and refined against initial engagement metrics, and were then deployed more broadly across platforms if those metrics indicated high virality potential. This iterative testing let operators identify the most effective messaging before committing full amplification resources. High-performing narratives received sustained amplification in multiple waves: an initial burst established baseline visibility, followed by maintenance amplification and periodic re-amplification around relevant news events.

Cross-Platform Narrative Migration demonstrated sophisticated operational planning. Narratives didn't simply replicate across platforms but adapted to each platform's characteristics: Telegram posts provided detailed context and framing; TikTok videos distilled narratives into emotional, visually-compelling short-form content; Facebook posts targeted older demographics with longer-form argumentation; X posts emphasized viral catchphrases optimized for retweets. This platform-specific adaptation required either centralized content production teams or distributed networks with clear operational guidelines.

The second dataset (rendered below) documents inauthentic behaviour on Facebook, predominantly in comment sections, between the 9th of September (before the official campaign began) and the 4th of December 2024 (just before the second round of voting). Our analysis indicates a clustering effect, which may be indicative of CIB (Coordinated Inauthentic Behaviour) tactics. Building on this analysis, the data suggests that Cluster 1 and Cluster 2 actors were central to tactical dissemination (through comments), functioning as core nodes within a larger influence network. Their behaviour, characterised by unusually high post frequency and source URL diversity over a compressed time window, may align with coordinated information laundering techniques or URL cycling. Most source pages on which comments were posted en masse, repetitively, and within short timespans (0-5 minutes) were the social media pages of mainstream publications with substantial followings. These actors likely served to inject and legitimise candidate-related messaging: most comments reiterated slight variations of "Vote for Georgescu", but some were subtler posts aligned with the isolationist/neutral narratives documented previously (e.g., "Romania neither East nor West"), reflecting a pro-Russian outlook and, even more so, official Kremlin postures. The same comments were then diffused through the lower-activity clusters (Cluster 3), thereby simulating widespread public consensus.

This structure mirrors known CIB tactics in which a small nucleus of high-output accounts pushes coordinated messaging, while peripheral accounts inflate credibility and algorithmic ranking through artificial popularity signals. This configuration may also reveal a deliberate effort to exploit Facebook's engagement-driven content surfacing algorithms, using minimal but widespread inauthentic activity to overwhelm moderation thresholds, evade content filters, and manipulate public perception, particularly during politically sensitive timeframes. At the same time, by this point certain narratives may already have become organic and/or mainstreamed into public discourse.

The Low Activity subclusters (interactive graph above, filtering option) follow a noticeable step-down pattern, suggesting a tiered dissemination structure. The low-level engagement network, especially the single-interaction accounts, sustains an illusion of grassroots support: these accounts mostly post once, typically repeating core messages with minor variations. The next tier of low-activity accounts posted 2-4 times, most likely to maintain a longer-term presence. The low-to-moderate activity cohort is more active, posting an average of 8.5 times. This pattern may indicate bridge accounts: less prominent, yet responsible for prolonging content life cycles. If single-interaction accounts share identical content with burst-heavy accounts, this may indicate scripted behaviour, as bots tend to exhibit very limited, repetitive activity.

The scatter plot above focuses on the Low Activity cluster and the distribution of burst activity. The linear relationship between burst posts (X-axis) and distinct URLs (Y-axis) reveals uniform behaviour among the low-activity actors, which is uncharacteristic of organic users, who typically post with some variation. The consistent, incremental increase in distinct URLs with burst posts (multiple posts within short timeframes) suggests a centralised content distribution strategy in which each burst of activity (i.e., each comment) corresponds to a different source URL, creating the illusion of diverse engagement. The simultaneous activation of these low-activity accounts could have been orchestrated to simulate waves of engagement. The correlation between burst posts and distinct URLs is almost perfect (0.9999), and distinct URLs track burst posts at a 1:1 ratio, an indication of automated URL cycling (posting identical messages across slightly altered URLs targeting the same media outlet or news source) and of distributed engagement tactics, whereby low-activity accounts are allocated specific subsets of URLs to evade detection. Such behaviour aligns with tactics observed in coordinated information operations that circumvent platform detection algorithms by diversifying URL usage.

Behavioral Anomalies

Beyond burst activity metrics, temporal behavioral analysis reveals anomalous posting patterns inconsistent with organic human behavior, providing additional signatures for detecting coordinated inauthentic activity. Time-of-day posting patterns during Romania's 2024 presidential elections, standardized to UTC, show clear signs of a centrally coordinated campaign rather than distributed grassroots activity.

Most striking is the pronounced surge at 00:00 UTC (02:00 local time in Romania, which observes UTC+2 in November and December), where posting volume is nearly double that of any other hour. This is atypical for organic users and more consistent with automated scheduling or centrally managed content drops, designed to seed narratives during low-engagement hours so they can circulate by morning. Legitimate users rarely exhibit such pronounced activity precisely at midnight UTC, as human behavior tends toward distributed patterns around peak activity hours rather than synchronized spikes at arbitrary clock boundaries.

In addition to the midnight anomaly, there are secondary peaks around 12:00 UTC (lunchtime) and 17:00-18:00 UTC (early evening), periods that typically coincide with higher user engagement. Taken together, the midnight spike and these midday and evening clusters suggest that the accounts are not following natural human rhythms but rather a planned posting schedule indicative of coordinated messaging activity.
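A sketch of the time-of-day analysis: convert timestamps to UTC, count posts per hour, and compare the busiest hour with the runner-up. The sample data below is invented to show the mechanics; it is not drawn from the dataset.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone

# Romania observes EET (UTC+2) in November and December.
ROMANIA_TZ = timezone(timedelta(hours=2))


def hourly_profile(timestamps: list[datetime]) -> list[int]:
    """Posts per UTC hour (index 0-23). Naive datetimes are assumed to be UTC already."""
    hours = Counter()
    for ts in timestamps:
        if ts.tzinfo is not None:
            ts = ts.astimezone(timezone.utc)
        hours[ts.hour] += 1
    return [hours[h] for h in range(24)]


def peak_ratio(profile: list[int]) -> tuple[int, float]:
    """Busiest UTC hour and its volume relative to the next-busiest hour."""
    ranked = sorted(range(24), key=lambda h: profile[h], reverse=True)
    top, runner_up = ranked[0], ranked[1]
    if profile[runner_up] == 0:
        return top, float("inf")
    return top, profile[top] / profile[runner_up]


# Illustrative data: 10 posts at 02:00 Romanian time (= 00:00 UTC), 5 at 12:00 UTC, 4 at 17:00 UTC.
posts = (
    [datetime(2024, 11, 20, 2, 0, tzinfo=ROMANIA_TZ)] * 10
    + [datetime(2024, 11, 20, 12, 0, tzinfo=timezone.utc)] * 5
    + [datetime(2024, 11, 20, 17, 0, tzinfo=timezone.utc)] * 4
)
print(peak_ratio(hourly_profile(posts)))  # (0, 2.0)
```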

The temporal pattern reveals a sophisticated understanding of platform dynamics. Midnight seeding lets content accumulate initial engagement during low-competition hours, positioning it for algorithmic promotion when user activity increases. The lunchtime and evening peaks correspond to periods when target audiences are most active, maximizing visibility and engagement potential. This timing reflects operational planning rather than spontaneous organic activity.

Temporal pattern analysis employed multiple techniques to identify coordinated behavior: (1) time-of-day distribution analysis standardized to UTC identifying anomalous concentration at specific hours; (2) inter-post interval analysis measuring time gaps between posts within and across accounts; (3) cross-account temporal correlation detecting synchronized posting patterns; (4) day-of-week analysis identifying unusual activity patterns; (5) comparative baseline analysis contrasting observed patterns against known organic behavior profiles. Coordination indicators included pronounced midnight spikes, unusually tight temporal clustering, and deviation from expected circadian rhythms typical of human users.
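Techniques (2) and (3) above could be approximated as follows, assuming the posts of one message are available as (account, timestamp) pairs. The 60-second synchronization window and the sample accounts are hypothetical choices.

```python
from datetime import datetime, timedelta
from itertools import combinations


def inter_post_gaps(times: list[datetime]) -> list[float]:
    """Seconds between consecutive posts after sorting (technique 2)."""
    ordered = sorted(times)
    return [(later - earlier).total_seconds() for earlier, later in zip(ordered, ordered[1:])]


def synchronized_pairs(posts: list[tuple[str, datetime]],
                       window: timedelta = timedelta(seconds=60)) -> set[tuple[str, str]]:
    """Pairs of distinct accounts that posted the same message within `window` (technique 3).

    Quadratic in the number of posts: fine for one message, not for a whole corpus.
    """
    pairs = set()
    for (acc_a, t_a), (acc_b, t_b) in combinations(posts, 2):
        if acc_a != acc_b and abs(t_a - t_b) <= window:
            pairs.add(tuple(sorted((acc_a, acc_b))))
    return pairs


t0 = datetime(2024, 11, 26, 0, 0)
posts = [
    ("acc_a", t0),
    ("acc_b", t0 + timedelta(seconds=30)),
    ("acc_c", t0 + timedelta(minutes=5)),
]
print(inter_post_gaps([t for _, t in posts]))  # [30.0, 270.0]
print(synchronized_pairs(posts))               # {('acc_a', 'acc_b')}
```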

The midnight spike warrants particular attention as a detection signature. While some legitimate late-night activity occurs, the pronounced peak at precisely 00:00 UTC suggests automated scheduling systems programmed to deploy content at specific times. Legitimate late-night users would exhibit activity distributed across late hours rather than a concentrated spike at the exact midnight boundary. This temporal precision indicates automation or central coordination, with a timing discipline that distributed organic networks could not achieve.

Secondary peaks at lunchtime and evening hours appear more consistent with organic activity patterns, yet their occurrence in combination with the midnight anomaly reveals coordination. The pattern suggests a hybrid approach: automated overnight seeding supplemented by human-operated accounts deploying content during peak engagement hours. This hybrid strategy combines the efficiency of automation with human adaptability, enabling responsive tactics while maintaining industrial-scale volume.

Analysis of identical messages disseminated across multiple platforms reveals a pattern of coordinated amplification and synchronized activity. Linear graph analysis illustrates how identical messages propagate across platforms in closely synchronized timeframes, sometimes at identical or highly consistent intervals. Organic viral amplification, by contrast, arises unpredictably, depending on audience engagement and fluctuating interest.

Shortly after a message is seeded on the primary platform (Telegram), where a compromised source has a substantial audience, it is shared in quick succession across multiple platforms by a network of actors while the content remains identical. This suggests a strategic push following a deliberate distribution sequence. Longer gaps between posts may indicate that the coordinated campaign has successfully seeded the message into broader public discourse, transitioning from coordinated to organic spread. In other instances, however, longer intervals could signify strategic re-amplification or attempts to avoid detection.
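To compare propagation sequences across messages, one can record when each identical message first appeared on each platform. A sketch, assuming platform names and timestamps have already been extracted; the sample data is illustrative. Messages whose lag profiles repeat closely from one to the next would fit the deliberate sequence described above, while organic spread would show more irregular lags.

```python
from datetime import datetime


def propagation_sequence(posts: list[tuple[str, datetime]]) -> list[tuple[str, float]]:
    """For one identical message, order platforms by first appearance.

    Returns (platform, minutes after the earliest post) for each platform.
    """
    first_seen: dict[str, datetime] = {}
    for platform, ts in posts:
        if platform not in first_seen or ts < first_seen[platform]:
            first_seen[platform] = ts
    if not first_seen:
        return []
    ordered = sorted(first_seen.items(), key=lambda item: item[1])
    seed_time = ordered[0][1]
    return [(platform, (ts - seed_time).total_seconds() / 60) for platform, ts in ordered]


posts = [
    ("telegram", datetime(2024, 11, 26, 10, 0)),
    ("x", datetime(2024, 11, 26, 10, 4)),
    ("facebook", datetime(2024, 11, 26, 10, 2)),
    ("telegram", datetime(2024, 11, 26, 10, 30)),  # later repost; ignored for ordering
]
print(propagation_sequence(posts))  # [('telegram', 0.0), ('facebook', 2.0), ('x', 4.0)]
```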

Analysis of identical message dissemination across Germany and France revealed telling coordination patterns. In Germany, distribution displayed distinct phases marked by sharp spikes, prolonged intervals, and resurgent bursts, a technique commonly employed in disinformation campaigns to instill a perception of public relevance and widespread, independent interest in the content. Almost all actors disseminating the messages were compromised, having consistently engaged in disinformation or influence operations; this extensive network of compromised accounts and channels suggests a highly controlled, intentional amplification strategy aimed at influencing public opinion. The phased approach indicated a deliberate strategy of maintaining engagement over time, ensuring the message remained relevant across news cycles and socio-political contexts.

The message itself employed hashtag manipulation to enhance discoverability while inserting content into trending conversations: "#Romania #Presidential election #Georgescu. This election advert by Romanian presidential candidate Călin Georgescu should not only cause a stir in the pharmaceutical industry but also provide plenty to talk about!...✏️ Get activated for the comments💬"

The call to action urging participation in the comment section helped manipulate engagement metrics and push the message higher in platform algorithms. Identical content appeared across multiple platforms with minimal variation, using consistent hashtags and formatting that enabled coordinated amplification while maintaining the appearance of independent, organic sharing.

The combination of temporal anomalies and sequential distribution patterns provides robust detection signatures. Midnight spikes indicate automated scheduling; burst activity within five-minute windows indicates coordinated deployment; sequential cross-platform propagation with consistent timing indicates centralized coordination; and sustained multi-phase amplification indicates operational discipline impossible for organic movements. Convergence across multiple signatures enables high-confidence identification of coordinated inauthentic behavior even when individual indicators might admit alternative explanations.
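The convergence logic can be expressed as requiring several independent signatures before flagging a cluster. All thresholds here are hypothetical placeholders rather than values from this investigation, and the `Signatures` fields are simplified stand-ins for the metrics discussed above.

```python
from dataclasses import dataclass


@dataclass
class Signatures:
    midnight_peak_ratio: float        # busiest-hour volume / next-busiest hour
    share_first_5_min: float          # fraction of posts in the 0-5 minute window
    cross_platform_lag_spread: float  # std. dev. (minutes) of platform lags across messages
    amplification_phases: int         # bursts separated by quiet periods


# All thresholds are hypothetical placeholders, not values from this investigation.
def triggered(s: Signatures) -> list[str]:
    hits = []
    if s.midnight_peak_ratio >= 1.5:
        hits.append("automated scheduling")
    if s.share_first_5_min >= 0.5:
        hits.append("coordinated burst deployment")
    if s.cross_platform_lag_spread <= 5.0:
        hits.append("centralized cross-platform sequencing")
    if s.amplification_phases >= 3:
        hits.append("multi-phase amplification")
    return hits


def assess(s: Signatures, minimum: int = 3) -> str:
    """Flag only when several independent signatures converge."""
    return "coordinated" if len(triggered(s)) >= minimum else "inconclusive"


print(assess(Signatures(2.0, 0.92, 2.0, 3)))   # coordinated
print(assess(Signatures(2.0, 0.10, 40.0, 1)))  # inconclusive (one signature only)
```

Requiring a minimum number of hits is what lets any single indicator have an innocent explanation without triggering a flag on its own.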

These behavioral signatures inform platform detection systems and analytical frameworks for identifying future operations. However, sophisticated actors continuously adapt tactics to evade detection, necessitating ongoing refinement of detection methodologies. The arms race between detection and evasion requires sustained investment in analytical capabilities, platform transparency, and international coordination to maintain effectiveness against evolving threats.

In Focus: Facebook Coordinated Networks

This section contains the third iteration of our observations on networks influencing Romanian public opinion on Facebook, as identified by May 9, 2025, produced as part of a voluntary civic effort. The goal of this effort is to remove the barrier of technical expertise from monitoring influence networks on Facebook, for the public and the press but especially for the authorities. The lesson of the winter of 2024 was that whoever controls the networks controls the country; now we can all see the keys to the kingdom.

We have published the raw summary data, the code needed to extract the influence networks, as well as the files produced by the code: the complete graph, the partitioned graph, the file containing the summary of the networks, the raw Markdown document generated from this summary, and the document you are now reading. This way, the data is accessible to anyone, regardless of their technical level—from citizens, journalists, and authorities who prefer to consult text documents to experts in data science and/or programming who prefer to analyze data in ways other than those we have chosen to do here.

The primary source of data was the list of disinformation accounts compiled by the Facebook group AI de noi (about 3,000 entries); after heuristic network analysis, we expanded the list to over 35,000 monitored accounts.

Between March 23 and May 9, 2025, we downloaded and analyzed approximately 2.5 million public posts and over 5.5 million files attached to posts (images, videos, and reels), for a total of approximately 1.2 TB of data. We heuristically extracted a unique fingerprint for each post so that we could identify identical posts from multiple accounts even when there were attempts to conceal clones (e.g., multiple accounts posting the same image or video without sharing it). The culmination of this effort was a Jaccard similarity comparison of the accounts' sets of post fingerprints over the analyzed period, which involved approximately 500 million comparisons between accounts.
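The fingerprinting is described only as heuristic, so the following is a plausible sketch under stated assumptions rather than the published code: normalize the caption, hash each attached file with SHA-256, and hash the combination. Hashing content rather than post IDs catches the same image or video posted by several accounts without using Facebook's share function.

```python
import hashlib
import re


def text_key(text: str) -> str:
    """Case- and punctuation-insensitive normalization of a caption."""
    return " ".join(re.findall(r"\w+", text.lower()))


def post_fingerprint(text: str, media: list[bytes]) -> str:
    """SHA-256 over the normalized text plus the sorted hashes of attached files.

    Byte-level hashes do not survive re-encoding or cropping; matching those
    would need perceptual hashing, which this sketch does not attempt.
    """
    media_hashes = sorted(hashlib.sha256(blob).hexdigest() for blob in media)
    payload = "\n".join([text_key(text), *media_hashes])
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def account_fingerprints(posts: list[tuple[str, list[bytes]]]) -> set[str]:
    """The set of post fingerprints for one account, as compared with Jaccard similarity."""
    return {post_fingerprint(text, media) for text, media in posts}


image = b"example image bytes"  # stand-in for a downloaded attachment
a = account_fingerprints([("Votați Georgescu!", [image])])
b = account_fingerprints([("votați georgescu", [image])])
print(a == b)  # True
```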

The result was the list of over 790 accounts below, which appear to have the capacity to generate considerable influence on public opinion in Romania. Judging by the similarity of the posts, they appear to be part of coordinated networks, which are themselves more or less connected to each other; the accounts below have a total of over 160 million likes, followers, and friends (the accounts themselves, not their posts).

Version 3.0.0 of the report uses an improved methodology for selecting monitored accounts, fingerprinting posts, and aggregating networks into supernetworks; it is by far the most comprehensive version of the three editions to date:

- We removed irrelevant networks (the Romanian Police network and several international networks without content in Romanian) because they generated both scraping costs and confusion for people consulting the report;

- We removed both the "Gabriel Dimofte" network and the few accounts from the 2.x report that have since been closed;

- Despite all these accounts being removed from the report, version 3.0.0 contains 791 accounts compared to 750 in version 2.x (and a similar total reach).

We do not have the expertise to judge the quality of the messages posted by these networks or the similarity of the messages' content. Our effort is strictly technical, and the report is intended to be strictly informative, without value judgments; the report should be taken up by journalists, experts in communication, politics, and legislation, as well as state authorities.

- Account: Facebook user, profile, or page (not Facebook group, not TikTok account, X, etc.);

- Active account: account that has at least one post after the start of the 2025 presidential campaign (2025-04-04);

- Network: collection of accounts that disseminate identical content; we chose a Jaccard similarity threshold of 15%;

- Supernetwork: collection of networks that disseminate similar content; we chose a Jaccard similarity threshold of 5%;

- Accounts that disseminate similar content: we consider that two accounts disseminate similar content if the most recent posts of the two accounts exceed the Jaccard similarity threshold of 15%;

- Jaccard similarity: we define the Jaccard similarity between two accounts as the ratio between the number of identical posts (intersection) of the two accounts and the total number of unique posts (union) of the two accounts: |A ∩ B| / |A ∪ B|;

- The name of the network is given by the most representative account in the network;

- The most representative account in the network: the account that has the highest Jaccard similarity with the other accounts in the network;

Convention for representativeness:

- ★ indicates the account with the highest representativeness (the one that gave the network its name)

- ☆ indicates the account with the lowest representativeness (useful for estimating the degree of cohesion at the network level)
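The definitions above translate into a short all-pairs procedure. This sketch assumes networks are the connected components of the graph linking accounts at or above the 15% Jaccard threshold (single linkage), and reads "highest Jaccard similarity with the other accounts" as the highest mean similarity; both are interpretations, and the sample accounts are invented.

```python
from itertools import combinations


def jaccard(a: set, b: set) -> float:
    """|A ∩ B| / |A ∪ B|, as defined above; 0 for two empty sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def _find(parent: dict, x: str) -> str:
    while parent[x] != x:
        parent[x] = parent[parent[x]]  # path halving keeps trees shallow
        x = parent[x]
    return x


def build_networks(accounts: dict[str, set], threshold: float = 0.15):
    """Link accounts whose fingerprint sets reach `threshold`; networks are the
    connected components (single linkage) with at least two members."""
    parent = {name: name for name in accounts}
    sims: dict[tuple[str, str], float] = {}
    for a, b in combinations(accounts, 2):  # all pairs: n(n-1)/2 comparisons
        s = jaccard(accounts[a], accounts[b])
        sims[(a, b)] = sims[(b, a)] = s
        if s >= threshold:
            parent[_find(parent, a)] = _find(parent, b)
    components: dict[str, list[str]] = {}
    for name in accounts:
        components.setdefault(_find(parent, name), []).append(name)
    return [members for members in components.values() if len(members) > 1], sims


def rank_members(network: list[str], sims: dict) -> list[str]:
    """Most to least representative by mean Jaccard with the other members:
    the first would be marked ★ (and name the network), the last ☆."""
    def mean_similarity(x: str) -> float:
        others = [y for y in network if y != x]
        return sum(sims[(x, y)] for y in others) / len(others)
    return sorted(network, key=mean_similarity, reverse=True)


accounts = {"A": {1, 2, 3, 4}, "B": {1, 2, 3, 5}, "C": {1, 2, 3, 4, 5}, "D": {9}}
networks, sims = build_networks(accounts)
print(networks)                         # [['A', 'B', 'C']]
print(rank_members(networks[0], sims))  # ['C', 'A', 'B']
```

Running `build_networks` again with `threshold=0.05` on the union of each network's fingerprint sets would be one way to approximate the 5% supernetwork step. Storing every pairwise similarity, as this sketch does, would not scale to tens of thousands of accounts; a practical pipeline would keep only pairs above the lower threshold.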

Constitutional Intervention

The first round of the presidential election was held on the 24th of November 2024, with the second scheduled for the 8th of December. For extra-territorial constituencies (Romanians voting from abroad), voting began on the 6th of December, the same day the Constitutional Court annulled the results and suspended the vote. By then, over 50,000 Romanians had already voted from abroad. Institutional communication and coordination between the Court, the electoral authorities, and Romania's Ministry of Foreign Affairs (responsible for organising voting abroad) was inadequate, which paved the way for public contestation and societal polarisation.

On December 6, 2024, Romania's Constitutional Court rendered an unprecedented decision: the complete annulment of the first round of the presidential election based on declassified intelligence confirming foreign interference. The nine-judge panel ruled unanimously that the integrity of the democratic process had been compromised to a degree requiring extraordinary intervention, marking the first time in Romania's post-communist history that electoral results were invalidated due to external manipulation.

The decision came after the Supreme Council of National Defense (CSAT) declassified intelligence documents on December 4, providing the Constitutional Court with evidence previously restricted to the security services. These documents detailed systematic cyber attacks on electoral infrastructure, financial flows from Russian sources funding interference operations, coordination between state actors and domestic proxies, and the scale of the bot network deployment documented in this investigation: 25,000 TikTok accounts, 99 Russian state-affiliated entities, and coordinated activity spanning 50 countries.

Romania's Constitutional Court, established by the 1991 Constitution, serves as the guardian of constitutional order, with authority to review electoral complaints and, in exceptional circumstances, invalidate results. Article 146 grants the Court jurisdiction over the legality of presidential elections, while Article 147 specifies that Court decisions are "general binding" and "final."

However, the electoral annulment represented an unprecedented application of these constitutional provisions. Previous Court interventions addressed procedural irregularities or administrative errors, never foreign state interference operations. The December 6 decision thus established a new legal precedent: electoral results can be invalidated when foreign interference fundamentally compromises the integrity of the democratic process, even if direct vote manipulation cannot be proven.

The Court's reasoning emphasized that democratic legitimacy requires not merely accurate vote counting but fair competition conducted in an information environment free from systematic foreign manipulation. The decision states: "Electoral process integrity encompasses not only technical administration of voting but informational conditions enabling voters to make informed choices free from systematic foreign interference designed to distort public opinion and manipulate electoral outcomes."

The decision sparked immediate controversy. Georgescu supporters characterized it as a "judicial coup," claiming the Court had overstepped its authority and invalidated the "will of the people." These narratives, amplified through the same networks that had promoted Georgescu, framed the annulment as confirmation of their claims of an anti-democratic establishment conspiracy. Network analysis revealed that these post-annulment narratives followed coordinated deployment patterns identical to those of the pre-election operations, demonstrating operational persistence beyond the electoral timeline.

However, constitutional scholars and international observers largely supported the Court's decision as a legitimate exercise of constitutional authority protecting democratic integrity. The European Commission released a statement acknowledging "serious concerns regarding foreign interference" and supporting Romanian institutions' efforts to "safeguard electoral integrity." NATO officials, while avoiding direct comment on domestic political matters, emphasized member states' right to protect democratic processes from foreign manipulation.

The December 4 CSAT declassification was a carefully calibrated disclosure that balanced transparency with operational security. The released documents included aggregated data on cyber attack sources and methods, without exposing specific detection capabilities; financial intelligence on funding flows, with redactions protecting sources; network analysis of coordination patterns, without revealing human intelligence assets; and an assessment of Russian state actor involvement based on multiple corroborating intelligence streams. The declassification provided sufficient evidence for the Constitutional Court's evaluation while preserving operational security for ongoing intelligence operations.

The Court's decision included several key provisions shaping the future electoral process. First, a new election timeline would allow a comprehensive security review and the implementation of enhanced protections against interference. Second, electoral authorities received a mandate to coordinate with intelligence services in monitoring and addressing foreign interference. Third, platform companies operating in Romania were put on notice that future interference might trigger regulatory consequences.

Most significantly, the decision established that foreign information operations constitute grounds for invalidating an election when their scale and sophistication compromise democratic legitimacy. This precedent may influence other European democracies facing similar threats by providing a legal framework for extraordinary interventions when standard countermeasures prove insufficient.

International legal scholars note the decision's broader implications for democratic defense. If foreign interference operations become sophisticated enough to fundamentally compromise electoral information environments, as this investigation documents through its analysis of 3,585 coordinated messages, 99 Russian state-affiliated entities, and 92% of activity concentrated in five-minute burst windows, then traditional defenses may prove inadequate. The Romanian precedent suggests that extraordinary institutional interventions may become a necessary component of democratic defense.

The decision's legitimacy ultimately depends on transparency and accountability. Romanian authorities committed to publishing detailed post-election reports documenting the interference evidence, implementing enhanced protections for future elections, and engaging international partners in developing coordinated responses to hybrid threats. These commitments aim to demonstrate that the annulment served democratic protection rather than partisan interests, establishing the credibility essential for public acceptance and for the precedent's future use.

The unprecedented nature of the Constitutional Court's decision reflects the unprecedented nature of the threat documented in this investigation. The scale of coordination across 50 countries, the burst activity that concentrated 92% of posts within five-minute windows, the three-tier architecture encompassing 99 Russian state-affiliated entities, and the temporal anomalies revealing centralized coordination together describe an interference operation qualitatively different from earlier attempts at electoral manipulation. The Court's response was extraordinary, but so were the circumstances it addressed.

Implications for Democratic Resilience

The Romanian case offers crucial insights for defending democracies against sophisticated hybrid threats. The operation's scale (3,585 messages coordinated across 5 platforms from 50 countries, 99 Russian state-affiliated entities, 25,000 TikTok bot accounts, and 92% of activity concentrated in coordinated burst windows) shows that even EU and NATO membership provides insufficient protection without comprehensive response strategies addressing platform governance, intelligence integration, civil society capacity, and international coordination.

Platform Governance Failures enabled the operation's success despite the platforms' stated commitments to election integrity. TikTok's recommendation algorithm amplified coordinated manipulation for weeks before detection systems identified the 25,000-account network, which included 800 dormant accounts activated after years of inactivity. Facebook's infrastructure allowed 8,812 posts, 92% of them concentrated in five-minute burst windows, to flood the information environment. Telegram's permissive policies provided a persistent coordination hub resistant to disruption. These platform-level failures reflect structural incentives that favor engagement over integrity.
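The reactivation of long-dormant accounts can be screened for with a simple gap test. The Python sketch below is illustrative only: the `Account` record, the `flag_reactivated` helper, the one-year dormancy threshold, and the campaign cutoff date are hypothetical choices, not the detection method used by TikTok or by this investigation. It flags an account when its first post inside the campaign window follows at least `min_dormancy` of silence since its previous post (or, if it never posted before, since its creation).

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class Account:
    # Hypothetical minimal account record for illustration.
    account_id: str
    created: datetime
    post_times: list[datetime] = field(default_factory=list)


def flag_reactivated(accounts, campaign_start, min_dormancy=timedelta(days=365)):
    """Return ids of accounts that resume posting in the campaign window
    after at least `min_dormancy` of silence (threshold is an assumption)."""
    flagged = []
    for acc in accounts:
        times = sorted(acc.post_times)
        before = [t for t in times if t < campaign_start]
        during = [t for t in times if t >= campaign_start]
        if not during:
            continue  # never active during the campaign window
        # Last sign of life before the campaign: latest earlier post, else creation date.
        last_seen = before[-1] if before else acc.created
        if during[0] - last_seen >= min_dormancy:
            flagged.append(acc.account_id)
    return flagged


campaign_start = datetime(2024, 11, 1)  # illustrative cutoff, not an official date
accounts = [
    Account("dormant_2016", datetime(2016, 5, 1), [datetime(2016, 6, 1), datetime(2024, 11, 10)]),
    Account("regular_user", datetime(2019, 3, 1), [datetime(2024, 10, 20), datetime(2024, 11, 12)]),
    Account("new_account", datetime(2024, 11, 5), [datetime(2024, 11, 6)]),
]
print(flag_reactivated(accounts, campaign_start))  # ['dormant_2016']
```

A gap test alone produces false positives (genuine users returning for an election), so in practice it would serve as one signal among several, combined with evidence such as synchronized posting across accounts.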

TikTok's role in the Romanian interference raises particular concerns given the platform's Chinese ownership and its relationship with the Beijing government. Although this operation appears to have been Russian-orchestrated, the vulnerability it exposed (25,000 coordinated accounts, including 800 pre-positioned since 2016) applies equally to potential Chinese operations. TikTok's algorithm processes user data in ways that enable sophisticated manipulation while resisting external scrutiny.

Several NATO member states have restricted TikTok on government devices, but a comprehensive security response remains elusive. The platform's integration into youth culture and its massive user base create political resistance to aggressive regulation. The Romanian case demonstrates that these concerns are not hypothetical: coordinated operations leveraging TikTok's algorithm achieved significant electoral impact, propelling a candidate from 5-10% in the polls to a 22.9% first-round result.

Balancing security imperatives with free expression principles poses a genuine dilemma. Outright bans risk appearing authoritarian and driving users to less regulated alternatives, yet permissive approaches let sophisticated adversaries exploit platforms against democratic interests. Finding an appropriate middle ground (robust transparency requirements, enhanced content moderation, restrictions on algorithmic manipulation, and mandatory disclosure of coordination networks) remains an urgent priority.

Effective platform reform requires mandatory transparency reporting on the detection and removal of coordinated inauthentic behavior; real-time information sharing with electoral authorities and security services during campaign periods; algorithmic modifications that reduce the amplification of burst activity and coordinated manipulation; and meaningful consequences for platforms that fail to prevent large-scale interference operations. The Romanian case provides an empirical foundation for regulatory frameworks by documenting specific manipulation tactics that require platform-level countermeasures.

Intelligence Community Integration with electoral defense represents a necessary evolution. The traditional separation between intelligence operations and domestic political processes reflected legitimate concerns about security services interfering in elections. However, foreign information operations targeting elections now constitute security threats that require intelligence community capabilities for detection and response. The CSAT's role in detecting and documenting the Romanian operation (cyber attacks from 33 countries, financial flows funding the interference, and coordination across 99 Russian state-affiliated entities) demonstrates that intelligence capabilities are essential for comprehensive threat assessment.

Effective response to transnational threats requires corresponding transnational coordination. The NATO Cyber Defence Centre, EU institutions, and bilateral intelligence sharing contributed to the Romanian response, but coordination remained insufficient for real-time operational effectiveness. An enhanced framework should include a rapid alert system for detecting electoral interference; shared threat intelligence on identified hostile networks; coordinated platform enforcement across jurisdictions; joint response protocols enabling simultaneous action across multiple states; and standardized analytical methodologies enabling comparable threat assessments across national contexts. The documentation in this investigation, spanning 50 countries of origin, 5 platforms, and multiple operational phases, demonstrates a scale that requires a coordinated international response.

Civil Society and Media Literacy constitute essential layers of defense, even though they prove insufficient on their own. Romanian civil society organizations, fact-checkers, and investigative journalists played crucial roles in exposing the interference before official acknowledgment. These capabilities require sustained support through public and private funding, legal protections for investigative journalism, and educational initiatives that build public resilience to manipulation. Yet Romania demonstrates that even robust civil society monitoring cannot fully counter state-level operations deploying the industrial-scale resources documented in this investigation.

Legal and Regulatory Frameworks require modernization for the hybrid threat environment. Current laws on electoral interference focus primarily on domestic actors and traditional campaign violations. Foreign state operations that employ digital platforms, coordinated networks spanning 50 countries, and the information manipulation tactics documented in this investigation often fall outside existing legal frameworks. Proposed reforms include explicit criminalization of coordinated foreign interference; platform liability for failing to prevent large-scale manipulation operations; transparency requirements for online political advertising and amplification; and international coordination on attribution standards enabling collective responses to identified state operations.

Future Threat Evolution demands anticipatory rather than reactive approaches. As defenses improve, adversaries will adapt by employing more sophisticated AI-generated content, exploiting emerging platforms before security measures develop, and leveraging technologies such as deepfakes and voice synthesis. The sophistication demonstrated in the Romanian operation (800 accounts pre-positioned in 2016 for activation in 2024, 99 Russian state-affiliated entities coordinating across three operational tiers, and 92% of activity concentrated in five-minute burst windows, consistent with industrial-scale automation) suggests that adversary capabilities continue to advance faster than defensive responses.

A particular concern is generative AI, which lowers the cost of content production while increasing its sophistication. Whereas current operations require human operators to create and deploy content, future operations might employ AI systems that generate thousands of variants automatically, each optimized for specific audiences and platforms. Such automation threatens to overwhelm human-dependent defense mechanisms, making AI-powered detection systems and automated response capabilities necessary. The burst activity patterns documented in this investigation (92% concentration within five-minute windows) suggest that automation already plays a substantial role; generative AI will likely amplify this trend considerably.
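The 92% burst-concentration figure can be made concrete with one plausible way of computing such a share. The Python sketch below assumes fixed, non-overlapping five-minute windows aligned to the Unix epoch and a hypothetical threshold (`min_posts`) for calling a window a burst; the `burst_share` function and its parameters are illustrative and are not this investigation's actual methodology.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone


def burst_share(timestamps, window_minutes=5, min_posts=10):
    """Fraction of posts falling in fixed windows that hold >= min_posts posts.

    Assumptions (not from the source): non-overlapping windows aligned to
    the Unix epoch, and an illustrative burst threshold of min_posts.
    """
    if not timestamps:
        return 0.0
    window_s = window_minutes * 60
    # Count posts per window index (seconds since epoch // window length).
    counts = Counter(int(ts.timestamp()) // window_s for ts in timestamps)
    # Sum the posts that sit in windows dense enough to count as bursts.
    in_bursts = sum(n for n in counts.values() if n >= min_posts)
    return in_bursts / len(timestamps)


base = datetime(2024, 11, 20, 12, 0, tzinfo=timezone.utc)
# Synthetic data: 12 posts within four minutes, plus 3 isolated posts.
burst = [base + timedelta(seconds=20 * i) for i in range(12)]
background = [base + timedelta(hours=h) for h in (1, 3.5, 6)]
print(round(burst_share(burst + background), 2))  # 0.8
```

Fixed windows can split a burst that straddles a window boundary; a sliding-window count is a common refinement when that matters, at the cost of slightly more bookkeeping.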

Democratic Legitimacy and Transparency must guide response measures so that the cure does not become worse than the disease. Heavy-handed state interventions in information environments risk replicating the authoritarian tactics democratic states seek to counter. Maintaining legitimacy requires transparent procedures for threat assessment and response; judicial oversight preventing abuse of security powers; public reporting enabling accountability; and a clear distinction between protecting democratic processes from foreign manipulation and suppressing legitimate domestic dissent.

The Romanian Constitutional Court's decision to annul the election, while legally justified and consistent with the evidence documented in this investigation, raises profound questions about democratic legitimacy in an age of information warfare. When external manipulation reaches a scale that compromises electoral integrity, do institutions serve democracy better by accepting corrupted results or by taking extraordinary action? There are no easy answers, but transparency about the evidence (3,585 coordinated messages, 99 Russian state-affiliated entities, 92% burst activity concentration, and temporal anomalies revealing centralized coordination) enables informed public discourse about appropriate democratic responses.

The operation's failure (Georgescu did not assume the presidency despite the interference) demonstrates that democracies can defend themselves when institutions function, when intelligence capabilities detect threats like those documented in this investigation, and when the political will exists for extraordinary protective measures. But the operation's near-success, propelling an unknown candidate from 5-10% in the polls to first place in the first round with 22.9% through coordinated interference spanning 50 countries, shows that the margin for error remains dangerously thin. Sustained investment in democratic defense capabilities, platform accountability, international coordination, and public resilience is essential for maintaining democratic integrity against hybrid threats that will only grow more sophisticated in the coming years.

Additional Resources

The following documents provide primary source materials, research reports, and official documentation relevant to understanding the electoral interference operation documented in this investigation.